Seeing Machines has unveiled its next-generation 3D Cabin Perception Mapping platform, introducing a real-time, scalable approach to understanding people and activity inside vehicles and other enclosed environments.

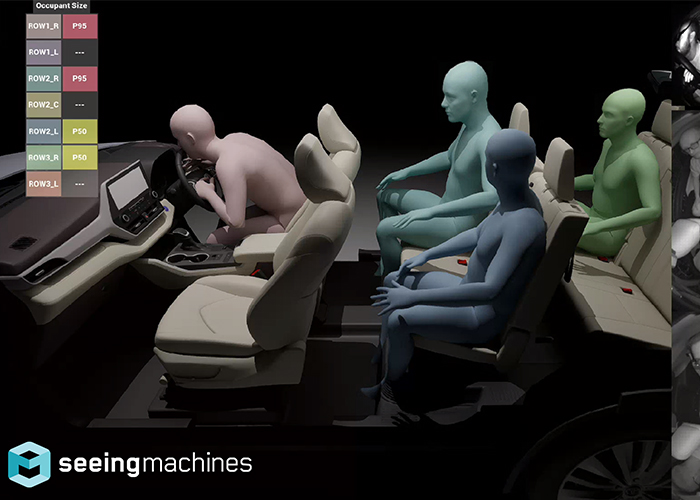

The new architecture delivers a comprehensive digital reconstruction of the interior cabin, enabling continuous, real-time insight into occupants, seating configurations and objects within the space. While exterior perception systems focus on sensing the environment around a vehicle, Seeing Machines’ 3D Cabin Perception Mapping addresses the equally complex challenge of understanding what is happening inside, using a single, unified perception layer.

Built on a clean-sheet architecture, the platform supports multiple cameras, multiple occupants and a wide range of safety and user-experience features at the same time. According to the company, solving perception for the entire cabin, rather than running separate feature-specific pipelines, improves consistency and robustness and maintains accuracy even when sensor data is intermittent or noisy.

A key advantage of the architecture is its abstraction layer, which decouples application development from specific camera layouts or sensing hardware. This allows features to be developed once and deployed across different vehicle types and configurations, reducing development cost and accelerating deployment timelines. For smart mobility operators, this enables faster rollout of new safety and monitoring capabilities across fleets without extensive redesign.
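Seeing Machines has not published the interfaces behind this abstraction layer, so the Python sketch below only illustrates the general design pattern under stated assumptions. Features are written against a hypothetical, hardware-independent cabin model (`CabinState`, `Occupant`), and each camera layout is a hypothetical adapter (`OneCameraFiveSeat`, `ThreeCameraSevenSeat`) that produces that model. All class names, seat labels and fields are illustrative, not the company's API, and the adapters return fixed example data in place of real perception.

```python
from dataclasses import dataclass, field
from typing import Protocol


# Hypothetical, hardware-independent cabin model. All names here are
# illustrative assumptions, not Seeing Machines' actual interfaces.
@dataclass
class Occupant:
    seat: str                     # illustrative seat label, e.g. "row2_left"
    child_seat: bool = False      # occupant is in a recognised child seat
    unsafe_posture: bool = False  # posture flagged as unsafe


@dataclass
class CabinState:
    occupants: list[Occupant] = field(default_factory=list)
    loose_objects: list[str] = field(default_factory=list)


class CabinSource(Protocol):
    """Any camera layout that can produce a CabinState."""

    def read_state(self) -> CabinState: ...


# Stand-ins for two different camera layouts. In a real system each would
# run perception on its own cameras; here they return fixed example states.
class OneCameraFiveSeat:
    def read_state(self) -> CabinState:
        return CabinState(
            occupants=[Occupant("row1_driver"),
                       Occupant("row1_passenger", unsafe_posture=True)],
        )


class ThreeCameraSevenSeat:
    def read_state(self) -> CabinState:
        seats = ["row1_driver", "row1_passenger", "row2_left", "row2_middle",
                 "row2_right", "row3_left", "row3_right"]
        occupants = [Occupant(s) for s in seats]
        occupants[2].child_seat = True      # row2_left
        occupants[6].unsafe_posture = True  # row3_right
        return CabinState(occupants=occupants, loose_objects=["bag", "phone"])


# Features are written once against CabinState and never touch camera data.
def unsafe_posture_seats(source: CabinSource) -> list[str]:
    return [o.seat for o in source.read_state().occupants if o.unsafe_posture]


def child_seat_locations(source: CabinSource) -> list[str]:
    return [o.seat for o in source.read_state().occupants if o.child_seat]


if __name__ == "__main__":
    for source in (OneCameraFiveSeat(), ThreeCameraSevenSeat()):
        print(type(source).__name__,
              unsafe_posture_seats(source),
              child_seat_locations(source))
```

In this sketch, supporting a new vehicle layout means writing one new adapter that fills in `CabinState`, while the feature functions stay unchanged. That is the "develop once, deploy across configurations" property described above, expressed in its simplest form.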

Seeing Machines said the platform represents a fundamental shift in interior sensing design, enabling higher accuracy and scalability while giving customers flexibility to evolve their feature strategies over time. The unified 3D perception approach also lowers the complexity of adding new capabilities as regulatory, safety and operational requirements change.

Beyond automotive applications, the platform could be relevant to smart-city settings such as public transport, shared mobility and autonomous shuttles, as well as to robotics and other human–machine interaction environments where accurate perception of people, posture and space is critical. The architecture supports a mix-and-match approach to 3D sensing technologies, allowing deployments to adapt as hardware options and use cases evolve.

In demonstration scenarios, the system produced a real-time digital reconstruction of a multi-row vehicle cabin using three cameras and supported up to seven occupants. Capabilities included full 3D body pose estimation, occupant size estimation and classification, detection of unsafe postures, seat configuration awareness, child seat recognition and identification of loose objects such as bags and mobile devices.

Seeing Machines said the platform reinforces its focus on vision-based safety technologies that support the next generation of intelligent, human-centred mobility systems, a key foundation for safer, more responsive smart-city transport networks.